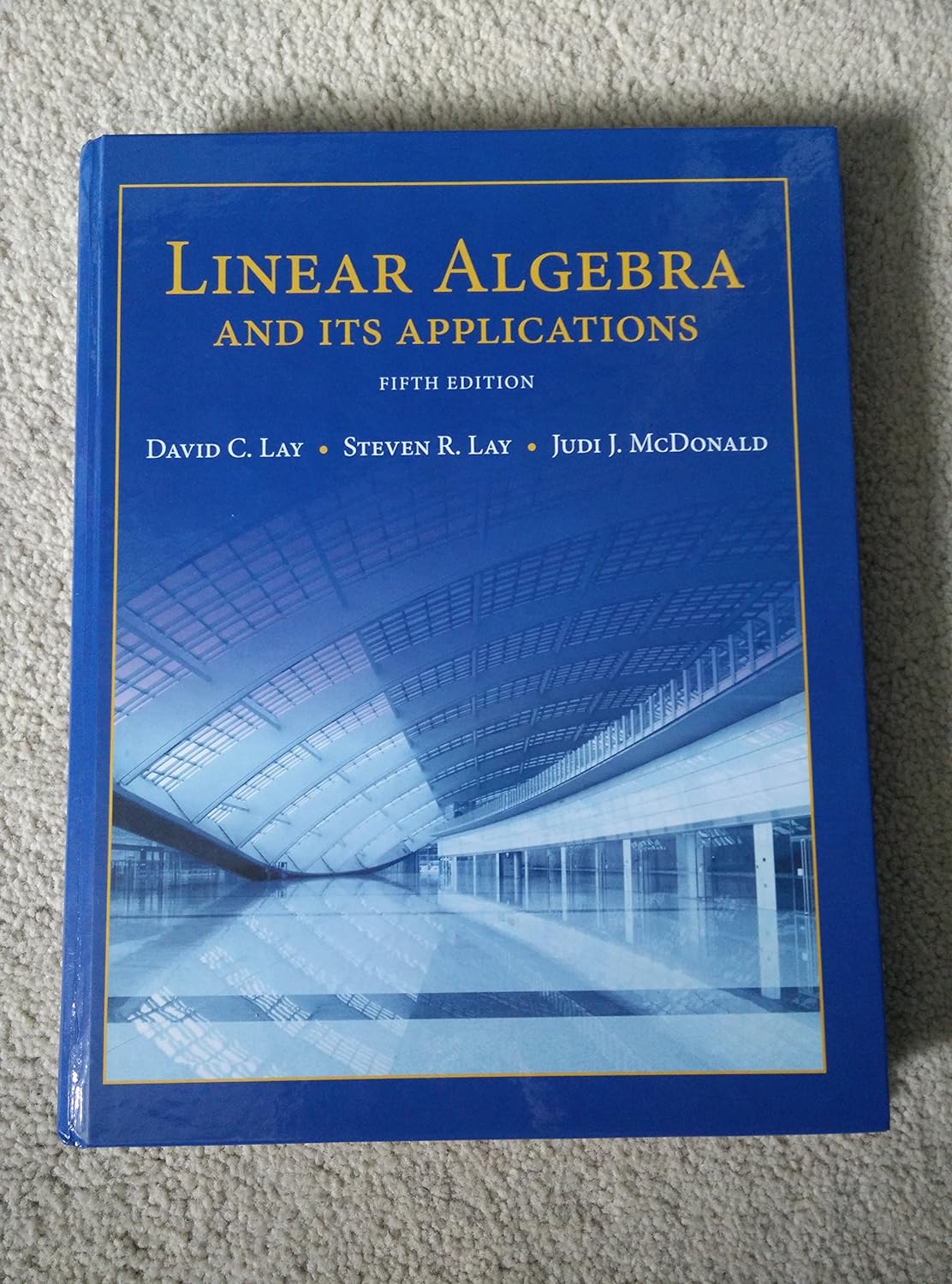

Linear Algebra and Its Applications

- Brand: Unbranded

Description

Linear algebra is central to almost all areas of mathematics. For instance, linear algebra is fundamental in modern presentations of geometry, including for defining basic objects such as lines, planes and rotations. Also, functional analysis, a branch of mathematical analysis, may be viewed as the application of linear algebra to function spaces.

Linear Algebra and its Applications publishes articles that contribute new information or new insights to matrix theory and finite-dimensional linear algebra in their …

A vector space over a field F (often the field of the real numbers) is a set V equipped with two binary operations satisfying certain axioms. Elements of V are called vectors, and elements of F are called scalars. The first operation, vector addition, takes any two vectors v and w and outputs a third vector v + w. The second operation, scalar multiplication, takes any scalar a and any vector v and outputs a new vector av. In stating the axioms that addition and scalar multiplication must satisfy, u, v and w denote arbitrary elements of V, and a and b denote arbitrary scalars in the field F.[8]
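As a hedged illustration of these definitions, the following Python sketch models V = Q^3 over F = Q with tuples of `fractions.Fraction` (an assumption chosen so that arithmetic is exact) and spot-checks the usual vector space axioms on a few sample vectors and scalars. The helper names `add`, `scale`, `neg` and `check_axioms` are hypothetical. A finite check cannot prove the axioms, but a failure would be a genuine counterexample.

```python
from fractions import Fraction
from itertools import product

# Illustrative model (an assumption): V = Q^3, F = Q, vectors are tuples of Fractions.
ZERO = (Fraction(0),) * 3

def add(v, w):
    """Vector addition v + w, componentwise."""
    return tuple(x + y for x, y in zip(v, w))

def scale(a, v):
    """Scalar multiplication a v, componentwise."""
    return tuple(a * x for x in v)

def neg(v):
    """Additive inverse -v = (-1) v."""
    return scale(Fraction(-1), v)

def check_axioms(vectors, scalars):
    """Spot-check the vector space axioms on finitely many samples.

    True is only evidence (the axioms quantify over all of V and F);
    False would be a genuine counterexample."""
    for u, v, w in product(vectors, repeat=3):
        if add(u, add(v, w)) != add(add(u, v), w):           # associativity of +
            return False
    for u, v in product(vectors, repeat=2):
        if add(u, v) != add(v, u):                            # commutativity of +
            return False
    for v in vectors:
        if add(v, ZERO) != v or add(v, neg(v)) != ZERO:       # zero vector, inverses
            return False
        if scale(Fraction(1), v) != v:                        # 1 v = v
            return False
    for a, b, v in product(scalars, scalars, vectors):
        if scale(a, scale(b, v)) != scale(a * b, v):          # a(b v) = (ab) v
            return False
        if scale(a + b, v) != add(scale(a, v), scale(b, v)):  # (a + b) v = a v + b v
            return False
    for a, u, v in product(scalars, vectors, vectors):
        if scale(a, add(u, v)) != add(scale(a, u), scale(a, v)):  # a(u + v) = a u + a v
            return False
    return True

samples = [tuple(Fraction(x) for x in t) for t in [(1, 2, 3), (0, -1, 4), (Fraction(1, 2), 0, 5)]]
print(check_axioms(samples, [Fraction(2), Fraction(-1, 3), Fraction(0)]))  # True
```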

One of the vector space axioms requires additive inverses: for every v in V, there exists an element −v in V, called the additive inverse of v, such that v + (−v) = 0.

Linear Algebra and its Applications also publishes articles that give significant applications of matrix theory or linear algebra to other branches of mathematics and to other sciences, provided they contain ideas and/or statements that are interesting from the linear algebra point of view.

The study of those subsets of vector spaces that are themselves vector spaces under the induced operations is fundamental, as it is for many mathematical structures. These subsets are called linear subspaces. More precisely, a linear subspace of a vector space V over a field F is a subset W of V such that u + v and au are in W for every u, v in W and every a in F. (These conditions suffice to imply that W is a vector space.) For example, given a linear map T: V → W, the image T(V) of V and the inverse image T⁻¹(0) of 0 (called the kernel or null space) are linear subspaces of W and V, respectively. When V = W are the same vector space, a linear map T: V → V is also known as a linear operator on V.

Let V be a finite-dimensional vector space over a field F, and (v_1, v_2, ..., v_m) be a basis of V (thus m is the dimension of V). By definition of a basis, the map

$$\begin{aligned}(a_1,\ldots,a_m)&\mapsto a_1\mathbf{v}_1+\cdots+a_m\mathbf{v}_m\\ F^m&\to V\end{aligned}$$

is a bijection from F^m onto V.

If U_1 and U_2 are finite-dimensional subspaces of V, the dimension of their sum is

$$\dim(U_1+U_2)=\dim U_1+\dim U_2-\dim(U_1\cap U_2).$$
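The kernel and image of a linear map, and the dimension formula for a sum of subspaces, can be checked on small examples. The sketch below assumes vectors in Q^n stored as Python lists and uses Gauss-Jordan elimination over `fractions.Fraction`; `rref`, `rank`, `kernel` and `intersection_dim` are illustrative names, not a library API. It computes the image and kernel dimensions of the map x ↦ Ax for a small matrix A, then checks dim(U_1 + U_2) = dim U_1 + dim U_2 − dim(U_1 ∩ U_2) for two coordinate planes in Q^3.

```python
from fractions import Fraction

# Illustrative helpers (hypothetical names), exact arithmetic over Q.
def rref(rows):
    """Gauss-Jordan elimination: (reduced row echelon form, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                                  # no pivot in column c
        m[r], m[p] = m[p], m[r]
        lead = m[r][c]
        m[r] = [x / lead for x in m[r]]               # make the pivot 1
        for i in range(len(m)):
            if i != r and m[i][c] != 0:               # clear column c elsewhere
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots

def rank(rows):
    """Dimension of the span of the given vectors (0 for an empty list)."""
    return len(rref(rows)[1]) if rows else 0

def kernel(A):
    """Basis of the kernel {x : A x = 0} of the matrix A (a list of rows)."""
    m, pivots = rref(A)
    n = len(A[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[free] = Fraction(1)                         # one free variable set to 1
        for i, p in enumerate(pivots):
            x[p] = -m[i][free]                        # solve for the pivot variables
        basis.append(x)
    return basis

def intersection_dim(U1, U2):
    """dim(span U1 ∩ span U2), from solutions of sum x_i a_i = sum y_j b_j."""
    n = len(U1[0])
    M = [[a[r] for a in U1] + [-b[r] for b in U2] for r in range(n)]
    common = []
    for sol in kernel(M):
        x = sol[:len(U1)]
        common.append([sum(xi * a[r] for xi, a in zip(x, U1)) for r in range(n)])
    return rank(common)

A = [[1, 2, 3], [2, 4, 6]]          # T: Q^3 -> Q^2, x |-> A x
print(rank(A), len(kernel(A)))      # 1 2  (dim of image T(V), dim of kernel)

U1 = [[1, 0, 0], [0, 1, 0]]         # the xy-plane in Q^3
U2 = [[0, 1, 0], [0, 0, 1]]         # the yz-plane in Q^3
print(rank(U1 + U2), rank(U1) + rank(U2) - intersection_dim(U1, U2))  # 3 3
```

Because row rank equals column rank, `rank(A)` also gives the dimension of the image T(V), so one helper serves both purposes; `U1 + U2` concatenates the two spanning lists, which spans the sum of the subspaces.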

Another important way of forming a subspace is to consider linear combinations of a set S of vectors: the set of all sums

$$a_1\mathbf{v}_1+a_2\mathbf{v}_2+\cdots+a_k\mathbf{v}_k,$$

where v_1, v_2, ..., v_k are in S and a_1, a_2, ..., a_k are in F, forms a linear subspace called the span of S.
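One practical consequence is a membership test: a vector v lies in the span of S exactly when appending v to S does not increase the rank. This is a minimal sketch assuming S is a finite list of vectors in Q^n; `rank` and `in_span` are hypothetical helper names, and the rank is computed by Gaussian elimination over `fractions.Fraction`.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors over Q via row echelon form (0 if empty)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for c in range(ncols):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(r + 1, len(rows)):             # eliminate below the pivot
            f = rows[i][c] / rows[r][c]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def in_span(S, v):
    """True if v = a_1 v_1 + ... + a_k v_k for some v_i in S and a_i in Q.

    Appending v to S leaves the rank unchanged exactly when v is in the span."""
    return rank(S + [v]) == rank(S)

S = [[1, 0, 1], [0, 1, 1]]
print(in_span(S, [2, 3, 5]), in_span(S, [0, 0, 1]))  # True False
```

Here [2, 3, 5] = 2·[1, 0, 1] + 3·[0, 1, 1], while [0, 0, 1] is independent of both vectors in S. The span of the empty set is {0}, which the rank comparison also handles.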


The axioms also require a zero vector: there exists an element 0 in V, called the zero vector (or simply zero), such that v + 0 = v for all v in V.

If any basis of V (and therefore every basis) has a finite number of elements, V is a finite-dimensional vector space. If U is a subspace of V, then dim U ≤ dim V; when V is finite-dimensional, equality of the dimensions implies U = V.

Linear maps are mappings between vector spaces that preserve the vector-space structure. Given two vector spaces V and W over a field F, a linear map (also called, in some contexts, linear transformation or linear mapping) is a map T: V → W that is compatible with addition and scalar multiplication, that is,

$$T(\mathbf{u}+\mathbf{v})=T(\mathbf{u})+T(\mathbf{v}),\quad T(a\mathbf{v})=aT(\mathbf{v})$$

for all vectors u, v in V and all scalars a in F. For example, for fixed scalars a_1, ..., a_n in F, the map from F^n to F given by

$$(x_1,\ldots,x_n)\mapsto a_1x_1+\cdots+a_nx_n$$

is linear.
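The two linearity conditions can be spot-checked on sample inputs. In this sketch, F = Q is assumed and the helper names `linear_form` and `looks_linear` are hypothetical: the map (x_1, ..., x_n) ↦ a_1x_1 + ⋯ + a_nx_n passes, while the affine map x ↦ f(x) + 1 fails because it does not send sums to sums.

```python
from fractions import Fraction
from itertools import product

def linear_form(coeffs):
    """The map (x_1, ..., x_n) |-> a_1 x_1 + ... + a_n x_n from F^n to F."""
    return lambda x: sum(a * xi for a, xi in zip(coeffs, x))

def looks_linear(T, vectors, scalars):
    """Spot-check T(u + v) = T(u) + T(v) and T(a v) = a T(v) on samples.

    True is only evidence; False is a genuine counterexample."""
    for u, v in product(vectors, repeat=2):
        if T([x + y for x, y in zip(u, v)]) != T(u) + T(v):
            return False
    for a, v in product(scalars, vectors):
        if T([a * x for x in v]) != a * T(v):
            return False
    return True

f = linear_form([2, -1, 3])
g = lambda x: f(x) + 1              # affine, not linear: g(0) = 1, not 0
vectors = [[1, 2, 3], [0, 1, -1], [Fraction(1, 2), 0, 4]]
scalars = [Fraction(3), Fraction(-1, 2)]
print(looks_linear(f, vectors, scalars), looks_linear(g, vectors, scalars))  # True False
```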

The procedure for solving simultaneous linear equations now called Gaussian elimination (carried out with counting rods) appears in Chapter Eight, "Rectangular Arrays", of the ancient Chinese mathematical text The Nine Chapters on the Mathematical Art. Its use is illustrated in eighteen problems, with two to five equations.[4]

A set of vectors that spans a vector space is called a spanning set or generating set. If a spanning set S is linearly dependent (that is, not linearly independent), then some element w of S is in the span of the other elements of S, and the span remains the same if w is removed from S. One may continue to remove elements of S until obtaining a linearly independent spanning set. Such a linearly independent set that spans a vector space V is called a basis of V. The importance of bases lies in the fact that they are simultaneously minimal generating sets and maximal independent sets. More precisely, if S is a linearly independent set and T is a spanning set such that S ⊆ T, then there is a basis B such that S ⊆ B ⊆ T.
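Both ideas in this passage can be sketched with one elimination routine. The code below is a minimal illustration over Q using `fractions.Fraction`, not a reconstruction of the historical counting-rod procedure. `solve` applies Gauss-Jordan elimination to the augmented matrix [A | b], and `basis_from_spanning_set` keeps the vectors of a spanning set S that become pivot columns, which is a forward variant of removing redundant elements one at a time. All function names are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan elimination over Q: (RREF rows, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # swap the pivot row into place
        lead = m[r][c]
        m[r] = [x / lead for x in m[r]]   # scale the pivot to 1
        for i in range(len(m)):           # clear column c in all other rows
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots

def solve(A, b):
    """One solution of A x = b (free variables set to 0), or None if inconsistent."""
    n = len(A[0])
    m, pivots = rref([list(row) + [bi] for row, bi in zip(A, b)])
    if n in pivots:                       # a row reads 0 = 1: no solution
        return None
    x = [Fraction(0)] * n
    for i, c in enumerate(pivots):
        x[c] = m[i][n]
    return x

def basis_from_spanning_set(S):
    """Linearly independent subset of S with the same span.

    With the vectors of S as columns, the pivot columns of the RREF are
    exactly the vectors not in the span of the ones before them."""
    cols = [[v[r] for v in S] for r in range(len(S[0]))]
    _, pivots = rref(cols)
    return [S[j] for j in pivots]

# x + y + z = 6,  2y + 5z = -4,  2x + 5y - z = 27
print(*solve([[1, 1, 1], [0, 2, 5], [2, 5, -1]], [6, -4, 27]))  # 5 3 -2

S = [[1, 0, 1], [2, 0, 2], [0, 1, 1], [1, 1, 2]]
print(basis_from_spanning_set(S))  # [[1, 0, 1], [0, 1, 1]]
```

In the example, [2, 0, 2] is twice [1, 0, 1] and [1, 1, 2] is the sum of [1, 0, 1] and [0, 1, 1], so both are discarded and the remaining two vectors form a basis of the span.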

- Fruugo ID: 258392218-563234582

- EAN: 764486781913


Sold by: Fruugo